TL;DR: Successive, well-intentioned changes to architecture and technology throughout the lifetime of an application can lead to a fragmented and hard-to-maintain code base. Sometimes it is better to favour consistent legacy technology over fragmentation.

An ‘anti-pattern’ describes a commonly encountered pathology or problem in software development. The Lava Layer (or Lava Flow) anti-pattern is well documented (here and here, for example). Its symptoms are a fragile and poorly understood codebase with a variety of different patterns and technologies used to solve the same problems in different places. I’ve seen this pattern many times in enterprise software. It’s especially prevalent where the software is large, mission-critical and long-lived, and where there is high staff turnover. In this post I want to show some of the ways that it occurs and how it’s often driven by a very human desire to improve the software.

To illustrate I’m going to tell a story about a fictional piece of software in a fictional organisation with made up characters, but closely based on real examples I’ve witnessed. In fact, if I’m honest, I’ve been several of these characters at different stages of my career. I’m going to concentrate on the data-access layer (DAL) technology and design to keep the story simple, but the general principles and scenario can and do apply to any part of the software stack.

Let’s set the scene…

The Royal Churchill is a large hospital in southern England. It has a sizable in-house software team that develops and maintains a suite of applications supporting the hospital’s operations. One of these is WidgetFinder, an asset management application used to track the hospital’s large collection of physical assets: everything from beds to CT scanners. Development on WidgetFinder started in 2005. The team that wrote version 1 was led by Laurence Martell, a developer with many years’ experience building client-server systems based on VB/SQL Server. VB was in the process of being retired by Microsoft, so Laurence decided to build WidgetFinder on the relatively new ASP.NET platform. He read various Microsoft design guideline papers and a couple of books and decided to architect the DAL around the ADO.NET RecordSet. He and his team hand-coded the DAL and exposed DataSets directly to the UI layer, as was demonstrated in the Microsoft sample applications. After seven months of development and testing, Version 1 of WidgetFinder was released and soon became central to the Royal Churchill’s operations. Indeed, several other systems, including auditing and financial applications, soon had code that directly accessed WidgetFinder’s database.

As with any successful enterprise application, a new list of requirements and extensions evolved, and budget was assigned for version 2. Work started in 2008. Laurence had left and a new lead developer had been appointed. His name was Bruce Snider. Bruce came from a Java background and was critical of many of Laurence’s design choices. He was especially scornful of the use of DataSets: “an un-typed bag of data, just waiting for a runtime error with all those string-indexed columns.” Indeed, WidgetFinder did seem to suffer from those kinds of errors. “We need a proper object-oriented model with C# classes representing tables, such as Asset and Location. We can code gen most of the DAL straight from the relational schema.” He asked for time and budget to rewrite WidgetFinder from scratch, but management rejected the request. Why would they want to rewrite a two-year-old application that was, as far as they were concerned, successfully doing its job? There was also the problem that many other systems relied on WidgetFinder’s database, and they would need to be rewritten too.

Bruce decided to write the new features of WidgetFinder using his OO/Code Gen approach and to refactor any parts of the application that the team had to touch as part of version 2. He was confident that his Code Gen DAL would eventually replace the hand-crafted DataSet code. Version 2 was released a few months later. Simon, a new recruit on the team, asked why some of the DAL was code generated and some of it hand-coded. It was explained that there had been this guy called Laurence who had no idea about software, but he was long gone.

A couple of years went by. Bruce moved on and was replaced by Ina Powers. The code gen system had somewhat broken down after Bruce left. None of the remaining team really understood how it worked, so it was easier just to modify the code by hand. Ina found the code confusing and difficult to reason about. “Why are we hand-coding the DAL in this way? This code is so repetitive, it looks like it was written by an automaton. Half of it uses DataSets and the other half some half-baked Active Record pattern. Who wrote this crap? If you hand-code your DAL, you are stealing from your employer. The only sensible solution is an ORM. I recommend that we rewrite the system using a proper domain model and NHibernate.” Again the business rejected a rewrite. “No problem, we will adopt an evolutionary approach: write all the new code DDD/NHibernate style, and progressively refactor the existing code as we touch it.” Many months later, Version 3 was released.

Mandy was a new hire. She’d listened to Ina’s description of how the application was architected around DDD with the data access handled by NHibernate, so she was surprised and confused to come across some code using DataSets. She asked Simon what to do. “Yeah, I think that code was written by some guy who was here before me. I don’t really know what it does. Best not to touch it in case something breaks.”

Ina, frustrated by management who didn’t understand the difficulty of maintaining such horrible legacy applications, left for a start-up where she would be able to build software from scratch. She was replaced by Gordy Bannerman, who had years of experience building large-scale applications. The WidgetFinder users were complaining about its performance: some of the pages took 30 seconds or more to appear. Looking at the code horrified Gordy: huge LINQ statements generating hundreds of individual SQL requests. No wonder it was slow. Who wrote this crap? “ORMs are a horrible leaky abstraction with all kinds of performance problems. We should use a lightweight data-access technology like Dapper. Look at Stack Overflow, they use it. They also use only static methods for performance; we should do the same.” And so the cycle repeated itself. Version 4 was released a year later. It was buggier than the previous versions. Gordy had dismissed Ina’s love of unit testing, and it’s hard to unit test code written mostly with static methods.
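One common way a data-access layer ends up sending “hundreds of individual SQL requests” is what is often called the N+1 query pattern: one query fetches a list, then one more query runs for each item in it. The minimal sketch below uses Python’s standard-library sqlite3 module to make the difference countable. The Asset/Location schema, the `CountingDb` wrapper and both functions are hypothetical; an ORM would typically issue the per-row queries implicitly through lazy loading rather than in a visible loop.

```python
import sqlite3
from typing import Dict, List, Sequence, Tuple


class CountingDb:
    """Hypothetical wrapper that counts every query sent to the database."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.queries = 0

    def query(self, sql: str, params: Sequence[object] = ()) -> List[Tuple]:
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def make_db(num_locations: int = 100, num_assets: int = 300) -> CountingDb:
    # Build a small in-memory database; assets are spread across locations.
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        "CREATE TABLE Location (Id INTEGER PRIMARY KEY, Name TEXT);"
        "CREATE TABLE Asset (Id INTEGER PRIMARY KEY, Name TEXT, LocationId INTEGER);"
    )
    conn.executemany(
        "INSERT INTO Location VALUES (?, ?)",
        [(i, f"Ward {i}") for i in range(1, num_locations + 1)],
    )
    conn.executemany(
        "INSERT INTO Asset VALUES (?, ?, ?)",
        [(i, "Bed", i % num_locations + 1) for i in range(1, num_assets + 1)],
    )
    return CountingDb(conn)


def locations_n_plus_one(db: CountingDb) -> Dict[int, str]:
    # One query for the assets, then one more query per asset: N + 1 round trips.
    result: Dict[int, str] = {}
    for asset_id, location_id in db.query("SELECT Id, LocationId FROM Asset"):
        (name,) = db.query("SELECT Name FROM Location WHERE Id = ?", (location_id,))[0]
        result[asset_id] = name
    return result


def locations_joined(db: CountingDb) -> Dict[int, str]:
    # The same data fetched in a single round trip with a JOIN.
    rows = db.query(
        "SELECT a.Id, l.Name FROM Asset a JOIN Location l ON l.Id = a.LocationId"
    )
    return dict(rows)
```

With the default 300 assets, `locations_n_plus_one` sends 301 queries and `locations_joined` sends one, and both return the same mapping from asset id to location name.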

Mandy left and was replaced by Peter. Simon introduced him to the WidgetFinder code. “It’s not pretty. A lot of different things have been tried over the years, and you’ll find several different ways of doing the same thing depending on where you look. I don’t argue; I just get on with trawling through the never-ending bug list. Hey, at least it’s a job.”

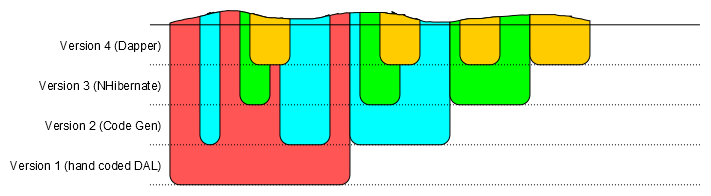

This is a graphical representation of the DAL code over time. The Y-axis shows the version of the software, starting with version one at the bottom and ending with version four at the top. The X-axis shows features, with the older ones to the left and the newer ones to the right. Each technology choice is coloured differently: red is the hand-coded RecordSet DAL, blue the Active Record code gen, green DDD/NHibernate and yellow Dapper/static methods.

No new design or technology choice ever completely replaced the one that went before. The application has archaeological layers revealing its history and the different technological fashions taken up successively by Laurence, Bruce, Ina and Gordy. If you look along the Version 4 line, you can see that there are four different ways of doing the same thing scattered throughout the code base.

Each successive lead developer acted in good faith. They genuinely wanted to improve the application and believed that they were using the best design and technology to solve the problem at hand. Each wanted to re-write the application rather than maintain it, but the business owners would not allow them the resources to do it. Why should they when there didn’t seem to be any rational business reason for doing so? High staff turnover exacerbated the problem. The design philosophy of each layer was not effectively communicated to the next generation of developers. There was no consistent architectural strategy. Without exposition or explanation, code standing alone needs a very sympathetic interpreter to understand its motivations.

So how should one guard against Lava Layer? How can we approach legacy application development in a way that keeps the code consistent and well architected? A first step would be a little self-awareness.

We developers should recognise that we suffer from a number of quite harmful pathologies when dealing with legacy code:

- We are highly (and often overly) critical of older patterns and technologies. “You’re not using a relational database?!? NoSQL is far, far better!” “I can’t believe this uses XML! So verbose! JSON would have been a much better choice.”

- We think that the current shiny best way is the end of history; that it will never be superseded or seen to be suspect with hindsight.

- We absolutely must ritually rubbish whoever came before us. Better still if they are no longer around to defend themselves. There’s a brilliant Dilbert cartoon for this.

- We despise working on legacy code and will do almost anything to carve something greenfield out of an assignment, even if it makes no sense within the existing architecture.

- Rather than try to understand legacy code, how it works and the motivations that created it, we throw up our hands in despair and declare that the whole thing needs to be rewritten.

If you find yourself suggesting a radical change to an existing application, especially if you use the argument that “we will refactor it to the new pattern over time”, consider that you may never complete that refactoring, and think about what the application will look like with two different ways of doing the same thing. Will this aid those coming after you, or hinder them? What happens if your way turns out to be sub-optimal? Will replacing it be easy? Or would it have been better to leave the older, but more consistent, code in place? Is WidgetFinder better for having four entirely separate ways of getting data from the database to the UI, or would it have been easier to understand and maintain with one? Try to have some sympathy and understanding for those who came before you. There was probably a good reason why things were done the way they were. Be especially sympathetic to consistency, even if you don’t necessarily agree with the design or technology choices.

34 comments:

You mean this Dilbert:

http://www.dilbert.com/strips/comic/2013-02-24/?CmtOrder=Rating&CmtDir=DESC

That's the one. "Are you coming to the 'Code Mocking'" :) Classic!

" architect the DAL around the ADO.NET RecordSet" - I think you meant "DataSet"

Absolutely agree. Perhaps this should be called the bastardised design anti-pattern. I have seen this many times. This is a problem accentuated by the Agile continuous improvement mindset. E.g. "this code still isn't testable, but all of this extra introduced complexity moves us a step closer to where the code can be made testable one day, so it's essential that we keep doing this"

I do feel that there is no easy solution to this. None of us know what is going to happen in the future so we aren't to know whether our refactoring efforts will ultimately prove fruitful or not. As you alluded to, a big part of the problem is developers moving on and getting replaced.

I think this is an area where Agile helps. That is, if the company places enough emphasis on the team. With a strong team, it doesn't matter if a developer leaves and is replaced, because anyone else in the team can pick up and carry on. And although a good team can pivot in business terms, architecturally speaking the team should design a flexible system that gradually improves over the long term.

One other point is this is very much linked with "resume driven development", for example as described here: http://www.bitnative.com/2013/02/26/are-you-a-resume-driven-developer/

Most of the high paying jobs tend to specialise in new technologies. This is much to do with supply and demand, with the majority of developers not having the experience of the new tech pushing up demand for the developers that do.

But this can also be a vicious cycle, with developers flocking to new technology that is only very marginally better than the previous technology, and actually worse until all of the potential pitfalls are understood and appreciated.

It's a hazard inherent in our industry, but it also makes the industry a lot more interesting. Often best advice or best practice proves to be wrong. Exhibiting good judgement is a lifetime's endeavour.

Duncan, thanks, you're right I did mean DataSet. Confused again by legacy data containers :p

It seems that this mindset also comes from engineers that latch onto new languages and frameworks as if they suddenly make hard problems simple to solve.

Maybe they do in trivial situations or if your team consists entirely of expert coders but more often than not your rewrites are working around problems and generating a new set of problems on top of that.

This is related to 'second system syndrome', which has a good write-up by Joel Spolsky here ...

The idea that new code is better than old is patently absurd. Old code has been used. It has been tested. Lots of bugs have been found, and they've been fixed. There's nothing wrong with it. It doesn't acquire bugs just by sitting around on your hard drive.

I wonder if a full suite of end-to-end acceptance tests would have saved this application, allowing the developers to actually replace the older code.

Mike,

I completely agree with this, and have seen the very same situation occurring many times throughout my own 20 years+ career.

I've been both the well-intentioned developer who tried to "fix" the existing code to use what I genuinely believed was a better technology to solve the problem at hand, and I've also been the developer who's had to come into a project some way down the line to deal with the mess that is multiple competing approaches or technologies trying to solve the same problem.

Whilst it's true that consistency within the entire codebase is very often preferable to having the complexity of multiple (different) solutions to the same problem scattered around, it's also true that over time newer technologies emerge that genuinely do solve a given problem in a way that is far better than what has gone before. The key is being able to spot and understand that, when a new approach or technology is being proposed, does this genuinely solve the problem in a better way, or just a different way?

What I'd *really* like to hear would be solutions to how to solve this problem of multiple approaches over time muddying the code-base, or better yet, how do we prevent this from happening in the first place?

It's a difficult problem. That is partly a good thing as it keeps the likes of me gainfully employed :-).

What I would propose is that where possible new features be implemented in separate smaller applications/sub-systems. It would potentially mean end-users using more than one tool but perhaps a more tolerable solution. This is assuming that you could use the same data store. Even if that were not possible perhaps having clear integration points would also enforce the separation of concerns, technology, development approach, etc.

This was a great article about how agile software is built!

...oh ... OH

I know this was part of your story, but one sentence I really have problems with is: "It’s hard to unit test code written mostly with static methods." This is absolutely 100% entirely false. Static methods are trivial to test, and in fact the easiest of all code to test, as long as you follow the SRP. The one crutch you may need is to reveal internals to the tests so they can initialize state or spy on the state bag.

Chris, you can't mock a static class. At least in a language like C#. If you can, it's not trivial.

@Sam Leach right and you don't need to mock the static class. you may however need to set the internal state to a known state.

If a static method is PURE it's simply input/output testing. Since PURE methods wouldn't really be relevant to your scenario, the static class would be ultimately interacting with a single field, a list, or dictionary. By forcing that state to a known condition you "mocked" it.

Now suppose your static class actually calls something else, such as a DataTable. Something you can't easily modify. You replace the direct call with a Func or Action, then overwrite the Func/Action in the tests. This is effectively adding the virtual keyword to static methods.

This whole thing sounds very familiar - I would hazard to say that the Lava Layer anti-pattern is in fact the dominant pattern when it comes to bespoke line-of-business system development, especially when it happens in an organization whose primary source of revenue is not software.

Having been in this situation personally multiple times, I now have a different perspective on the whole thing. The problem isn't so much a technical one as it is a philosophical one. The trick is to change your perspective - stop thinking about technology and start thinking about value; that is: value delivered to the customer. Of course, this is hard, but ultimately it really is the only way to address the issue in a sustainable way such that everyone (implementation and maintenance team and customer) benefits.

From a practical point of view, this means biting your tongue and just dealing with the "nasty" legacy stuff if you happen to be a purist, and address changes to the code from the perspective of someone most interested in delivering value in the short, medium and long terms. As a tech lead of a team dealing with a product like this, if you can't deal with even looking at hand-crafted DAL code (as an example) without feeling nauseous and you find yourself experiencing a strong emotional reaction to this sort of thing ("replace it all!"), have the maturity to appreciate that you might not be at the point where you should be leading a team like this - right now, that is.

I know what it's like to be that purist, because I've been there; I'm sure we all have. Whether or not you're using an ORM (full or otherwise) isn't the issue though; the issue is whether or not technology choices are in the best interests of the customer - in saying this, it's important to remember that using an architecture or technology that makes life easier for the implementation team is also something that is in the interests of the customer - it's just a question of degree.

The most critical (and most difficult) component of the value-centered approach is getting the customer to appreciate this way of doing things. It may sound like a no-brainer, trying to convince somebody that you'd like to modify your approach in order to be better at realizing value for the business - but actually it is very difficult - that's because the customer is more often than not concerned with tactical issues (you're interested in the wood, but they're concerned with the trees).

I think that you (and the articles you reference) are describing several different things. I wrote it up but it was too long to post here so I stuck it on my own blog.

I have comments turned off (I think) for SPAM reasons.

Could there also be a relationship between this anti-pattern and competency debt? http://www.leanway.no/competence-debt/

From personal experience, it was harder to be sympathetic to code I didn't write and didn't understand. I tended to see the areas for improvement, and acting on them incompletely, which is likely in a code base large enough that you don't fully understand it, leads exactly to the kind of inconsistency you illustrate above.

Good write-up. I've been observing this phenomenon for years and I think that one of the main causes is poor cost estimation by developers (or, often, no cost estimation).

The new project lead asks management to allow him to rewrite the project. It's a 10 man-year task, so management declines.

At that point he decides to make the changes gradually, completely ignoring the fact that it's still a 10 man-year task while, realistically, his team can spend 2 man-months a year on refactoring. Thus, the rewrite will be accomplished in 60 years.

The same pattern repeats with the next project lead and so on.
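Written out with the figures from this comment, the 60-year estimate is:

$$
\frac{10\ \text{man-years} \times 12\ \text{man-months per man-year}}{2\ \text{man-months per year}} = \frac{120}{2}\ \text{years} = 60\ \text{years}
$$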

@Eric Smith - I think the challenge with exclusively focusing on "value" is that the customer will ALWAYS pick "quick & dirty but delivers sooner". Quick wears off pretty fast, and all that's left is dirty.

This repeats until "quick" literally *isn't* possible anymore because of the accumulated cruft, at which point the client goes and finds a new team who is "way better at software than the last one".

I was once on a project where the data access approach changed midway through v1 development (ORM to dapper-esque). Even before anything had got into production, it already had this pattern.

And 2 ioc containers.

It was an agile project, so it must have been ok. Right?

Mike, thank you for writing this. I always share with my coworkers that a philosophy I have is consistent over "right." Developers always want to write and rewrite the "right" way. However the "right" way is only good until someone finds a new "right" way. At least if the code is consistent future devs can wrap their heads around it. They may not like it, but the chance that they can at least comprehend it is greater.

Everyone always argues "we'll refactor it later." No you won't. You never will. And the guy who comes after you won't either. I've been at this game far too long to believe that there will ever be a wholesale refactoring of a moderately large system. It doesn't make sense, no one will ever foot the bill for it.

Consistency is king, especially when it comes to comprehension. Thanks again.

Such a cool thread. Taking the fiction a step further, if I was the next guy to support the system, this is what I would do:

first - focus focus focus on understanding and minimising those endpoints where other systems are directly accessing WidgetFinder's database.

next - develop the next layer, again using current technology, and describe it to the business as a 'free app on your mobile' to track the assets. Crucially, make sure the app uses the same endpoints as the legacies.

then - start populating the back end database with wider enterprise data, and watch the app become the access method of choice for the whole business

What I'd *really* like to hear would be solutions to how to solve this problem of multiple approaches over time muddying the code-base, or better yet, how do we prevent this from happening in the first place?

You use OO principles and stick to one language. You don't even need an "OO" language to do this -- even in plain C you can do this, C89 from 25 years ago.

When a new approach appears that is better/required, you implement a compatible class/backend that uses that approach.

You never tie yourself to any particular implementation, but intentionally allow multiple implementations to be switched easily at run-time with no code changes.

This rests on 2 things:

1) choose one language/framework for a project, and stick to it;

2) developers do not hard-code things, everything is written in an OO fashion from day 1; even stuff that is OS/CPU/project specific, should be "under" or "implement" some other higher-level class/interface/API that already exists; if no such interface exists, you write that first, and THEN do your one-off implementation, so you can easily switch it out later if need be, or extend it/inherit from it inside another class, etc.

1) means if there is some new language/framework that has feature X and you really need it, you do the work and port/implement it using the language/framework you are already using, instead of saying "lets just switch all our code to the new language/framework Y!"; open source or in-house frameworks help here, where you have full control from top (your applications) to bottom (the libraries and perhaps even compiler/interpreter/run-time you use)

2) is not hard, but rarely done in practice, in my experience; most places PREFER proprietary, inflexible, hard-coded things, they see this as "added value" instead of "cornering yourself"; they see tight coupling as "value" and something competitors do not have

This covers "code" -- you still have to use data/databases/file formats that make sense and are "extendable" as needed, or at least documented/consistent so you can translate as needed.
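A minimal Python sketch of the approach described in this comment, assuming a simple registry keyed by a configuration string: callers depend only on an abstract `AssetStore`, and an in-memory backend and a SQLite backend (via the standard-library sqlite3 module) can be swapped at run time without changing the calling code. All class and function names here are hypothetical.

```python
import sqlite3
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Asset:
    asset_id: int
    name: str
    location: str


class AssetStore(ABC):
    """Higher-level interface; callers never see which backend they get."""

    @abstractmethod
    def add(self, asset: Asset) -> None: ...

    @abstractmethod
    def find_by_location(self, location: str) -> List[Asset]: ...


class InMemoryAssetStore(AssetStore):
    def __init__(self) -> None:
        self._assets: Dict[int, Asset] = {}

    def add(self, asset: Asset) -> None:
        self._assets[asset.asset_id] = asset

    def find_by_location(self, location: str) -> List[Asset]:
        matches = [a for a in self._assets.values() if a.location == location]
        return sorted(matches, key=lambda a: a.asset_id)


class SqliteAssetStore(AssetStore):
    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS Asset "
            "(Id INTEGER PRIMARY KEY, Name TEXT, Location TEXT)"
        )

    def add(self, asset: Asset) -> None:
        self._conn.execute(
            "INSERT INTO Asset (Id, Name, Location) VALUES (?, ?, ?)",
            (asset.asset_id, asset.name, asset.location),
        )
        self._conn.commit()

    def find_by_location(self, location: str) -> List[Asset]:
        rows = self._conn.execute(
            "SELECT Id, Name, Location FROM Asset WHERE Location = ? ORDER BY Id",
            (location,),
        )
        return [Asset(*row) for row in rows]


# Registry: the backend is chosen by a configuration value at run time.
BACKENDS: Dict[str, Callable[[], AssetStore]] = {
    "memory": InMemoryAssetStore,
    "sqlite": SqliteAssetStore,
}


def make_store(backend: str) -> AssetStore:
    return BACKENDS[backend]()
```

Under this design, adopting a new data-access approach means adding one more entry to `BACKENDS` rather than scattering a second way of doing the same thing through the callers. The sketch does not address the harder problems of migrating existing data or of callers that bypass the interface.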

Making code reusable and keeping it clean is very easy, just requires discipline.

Unfortunately, it is "easier" to make a mess in the short term.

"don't be lazy" but try telling that to the bean counters.

In small organizations, such things can be forgiven; you need to get to market.

Large, global, BILLION dollar corporations? Inexcusable, pure greed/laziness and nothing more.

'too many cooks' also comes into play. There is nothing inherently bad about many people on a project...but it does affect consistency and readability...small teams theoretically do better here, but again, discipline is needed.

self-discipline, more than anything. organizations do not care, or even if they did, working somewhere that micromanages every detail is no fun; you need developers who micromanage themselves when appropriate, and are disciplined enough to make well-thought-out changes in a sane manner.

as much blame can go to management "I need it NOW!" or sales or financial markets...developers really should know better.

computers/hardware have only gotten faster...whatever machine you are on, they would KILL for in the 80s, they would commit GENOCIDE for in the 1960s for that computing power...there is no excuse, just laziness.

we are supposed to be more productive with all this power at our fingertips...technology is supposed to free our time up, give us more leisure time...if anything, we should have the luxury to be MORE PATIENT and carefully reason things out than developers 10, 20, 50 years ago...why is this not occurring? markets? management? customers? developers are just lazy or always in a rush?

I've seen this pattern all too many times, but I think that the "pathologies" listed at the end are an attempt to rationalize it, by blaming the victim.

The prerequisite for all of this is high turnover. Turnover didn't exacerbate the problem; it caused it. Systems with a BDFL don't suffer from this problem. When management gave the workers poor working conditions, and inadequate budget for their needs, they left for greener pastures, and a new group of engineers was brought in. It's a miracle the software in this story shipped at all. The more common result is cancellation.

The Lava Layer is in no way symptomatic of the alleged "pathologies" of programmers. In any other field of work, firing the leader and hiring a new one halfway through the project yields exactly the same results. You see it in music, and writing, and theatre, and every other field you can imagine.

For a classic example of this (and to demonstrate that it's not at all new), watch "Excalibur". The filmmakers did the best with the material they had, perhaps, but you can tell when they had to switch from the early pagan stories to the later Christian stories, because the plot takes a definite turn for the weird. Ebert called it both "a wondrous vision" and "a mess".

You wouldn't have even heard of the Bent Pyramid if they'd decided to try razing the site and starting over, rather than making a Lava Layer out of it. To ask engineers to deal with these situations is to ask them to look beyond their own employment. It's gumption, not shortsightedness, that makes engineers overestimate this. Nobody wants to admit that a project is so bad they'll be gone in a year -- if they did, they wouldn't take the project in the first place.

It's simple to avoid the Lava Layer: work at a company that treats lead engineers so well they never want to leave. Google is famous for having super clean code. I believe this, more than the free laundry service, is what's keeping their top engineers from even considering looking elsewhere. Unfortunately, it's not a problem that can be solved at the engineering level, because it's not a problem which was created at the engineering level.

The Lava Layer is one instance of Conway's Law (1968): organizations which design systems are constrained to produce designs which are copies of the communication structures of these organizations. This particular instance is "high turnover", implemented in software.

The way I deal with 10-year-old+ legacy is that I take the time to understand it. I then fix bugs while being careful to minimize impact. I occasionally refactor small subsets of functions if I see tangible value to doing it and if the risk is low.

I rarely rewrite or refactor big chunks. That usually happens when the code is actually impossible to modify to accommodate the new requirements. Only in these extremely rare cases is it suitable to make big changes.

Most people start rewriting code when they hit "difficult".

It takes a lot of patience and empathy to deal with legacy. From what I see in the industry, legacy maintenance is not for everyone.

I really don't like this sentence:

"it doesn't matter if a developer leaves and is replaced, because anyone else in the team can pick up and carry on"

Maybe the changed technology is not the biggest problem, but rather the turnover of staff without a proper handover of the design and the ideas behind the code.

Nevertheless it's a tough question how to innovate the technical layer. Either you gradually improve things or you need to have a big bang project someday.

Let's face it: most systems have a lifetime of maybe 6-8 years. Afterwards they are plain legacy. Sometimes this is acceptable and sometimes not.

Wow, please shrink your navigation area on the left. It's so wide, the article text starts in the middle of my screen.

Very awkward to read... (using Chrome on Windows)

1. in Agile shops each sprint defines some work to be done, and a velocity calculated to show success rate.

2. Business/Management add requirements to the input funnel and are often resistant to refactoring as unproductive [as stated]

3. the dev team need to [be allowed] to add refactoring (say 15%) inputs

4. team need to vote on priority of what to refactor [in advance]

5. at least codebase and team progress shouldn't be hijacked by a lone RDD developer

"If you find yourself suggesting a radical change to an existing application, especially if you use the argument that, “we will refactor it to the new pattern over time.”"

I think the big piece that is missing is that this is basically the opposite of the strategy suggested by Fowler in "Refactoring", which is roughly: when faced with a difficult task, first refactor to make it an easy task, and then do the easy task.

That is, you're supposed to do the refactoring first. Then you never end up with multiple incompatible layers. You can also see up front whether your new approach is actually better than the old one, before you go to the effort to build anything new with it.

The problem with inventing a new system isn't the new system. New systems are often much better than old ones! The problem is only when you don't actually replace anything, but simply downgrade the entire working system to "tech debt" status, with no plans to do anything about that new classification.

I see two problems, both with the example you discuss here and with samples of the Lava Flow anti-pattern that I've worked on in the past: 1) There doesn't seem to be any abstraction layer (especially in Laurence's first draft, where the RecordSet is being passed up out of the data layer), which makes any effort to rewrite or improve the situation intractable, and 2) People say "we'll update the old code as we go" but they don't follow through with that. You can't say that you'll update the old code and then tell other developers "don't touch that old stuff because you don't want to break it."

The best cure for these kinds of problems is prevention: Use a strong abstraction layer and isolate the dependency on the DB so that the data layer can evolve separately from the business logic, with a minimum of effort. Also, use sufficient automated testing or verification to ensure that changes to the data layer internals don't create new bugs. Once you have these things in place, evolutionary updates to the data layer internals can proceed with confidence and relative ease.

So the problem I have with your point is that the developers involved made a promise ("we'll update the old stuff as we go") and they didn't follow through with that, leaving incomplete work. There's nothing wrong with a rewrite so long as you follow through with it, and that requires sufficient planning and resource allocation to make sure you can complete the task. If you don't have buy-in from management and can't allocate sufficient resources to complete it, you shouldn't start it. If you do have those things, and the will to do it, then there's no problem with it.
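A minimal Python sketch of the prevention this comment recommends, assuming a legacy DAL that hands back string-keyed rows (a stand-in for DataSet rows): an adapter hides those rows behind a typed repository interface, the business logic depends only on that interface, and one contract check runs against both the old and a newer implementation so that one can replace the other with some confidence. Every name below is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Protocol


@dataclass(frozen=True)
class Asset:
    asset_id: int
    kind: str
    location: str


class AssetRepository(Protocol):
    """The abstraction the business logic depends on."""

    def assets_at(self, location: str) -> List[Asset]: ...


class LegacyRowAdapter:
    """Wraps a legacy source of string-keyed rows (a stand-in for DataSet rows)."""

    def __init__(self, rows: Iterable[Dict[str, object]]) -> None:
        self._rows = list(rows)

    def assets_at(self, location: str) -> List[Asset]:
        # String-indexed access is confined to this one class.
        return sorted(
            (Asset(int(r["Id"]), str(r["Kind"]), str(r["Location"]))
             for r in self._rows if r["Location"] == location),
            key=lambda a: a.asset_id,
        )


class InMemoryRepository:
    """A 'newer' implementation that stores typed objects directly."""

    def __init__(self, assets: Iterable[Asset]) -> None:
        self._assets = sorted(assets, key=lambda a: a.asset_id)

    def assets_at(self, location: str) -> List[Asset]:
        return [a for a in self._assets if a.location == location]


def count_beds(repo: AssetRepository, location: str) -> int:
    # Business logic: knows nothing about rows, SQL or which DAL is in use.
    return sum(1 for a in repo.assets_at(location) if a.kind == "Bed")


def check_contract(repo: AssetRepository) -> None:
    # The same verification runs against every implementation.
    assert [a.asset_id for a in repo.assets_at("Ward 7")] == [1, 3]
    assert repo.assets_at("Nowhere") == []
    assert count_beds(repo, "Ward 7") == 1


SAMPLE_ROWS = [
    {"Id": 1, "Kind": "Bed", "Location": "Ward 7"},
    {"Id": 2, "Kind": "CT scanner", "Location": "Radiology"},
    {"Id": 3, "Kind": "Trolley", "Location": "Ward 7"},
]
SAMPLE_ASSETS = [Asset(int(r["Id"]), str(r["Kind"]), str(r["Location"])) for r in SAMPLE_ROWS]

for repo in (LegacyRowAdapter(SAMPLE_ROWS), InMemoryRepository(SAMPLE_ASSETS)):
    check_contract(repo)
```

The point of the shared `check_contract` is that the legacy adapter can be retired only once the newer implementation passes the same checks, which is one way of making "we'll update the old stuff as we go" verifiable rather than a promise.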

Is the fundamental problem here one of estimation, where the developers are under-estimating the amount of work it will take to bring the rest of the system up to modernity? We know that, historically, developers have been quite bad at estimation.

Also, I have a question about the later teams. Were they able to be more productive by using more modern technologies, at least for their parts? If they are more productive on the parts of code which are actively changing, and the older bits of code Just Work and don't need to be altered, isn't this an overall win from the business perspective?

One of the reasons I plan to stay at the company I am currently working for is because there are lots of technical people in management (that is, people promoted from within instead of being a hired MBA), which means that when some pimply-faced youth recommends we rewrite our mature, stable, highly-performant but legacy codebase from scratch using Python and MongoDB or whatever, he is politely declined because the technical people know what this entails. And you know what, our "legacy code" is maintainable and keeps customers happy because of that. Even new projects are required to use technologies that have been assessed by technical management (instead of developer leads promoting their pet language). And this is the reason the company can afford to train every new recruit on the technology our codebase uses.

Everything old is new again.

See Chesterson's Fence from his 1929 book The Thing:

"In the matter of reforming things, as distinct from deforming them, there is one plain and simple principle; a principle which will probably be called a paradox. There exists in such a case a certain institution or law; let us say, for the sake of simplicity, a fence or gate erected across a road. The more modern type of reformer goes gaily up to it and says, "I don't see the use of this; let us clear it away." To which the more intelligent type of reformer will do well to answer: "If you don't see the use of it, I certainly won't let you clear it away. Go away and think. Then, when you can come back and tell me that you do see the use of it, I may allow you to destroy it."

Cheers.

Oops, that should be "G. K. Chesterton", not "Chesterson". :/
